Continuous Integration at Ocient (Part 1)

How do we enable developers to write correct code fast? Unlike my last post, this one gives a high-level overview of what works for us and why, rather than focusing on how we implemented it.

Obligatory: the views expressed in this blog are my own and do not constitute those of my employer (Ocient).

Background

What does our CI need to accomplish?

Traditionally I think of CI as “if something passes this, then it is correct”. However, proving that a pull request is bug-free and has no negative performance implications is a near-impossible task (especially doing so quickly). What we are aiming for at Ocient is “if it passes CI, then it is good enough for another developer to use”. The distinction is key because it allows us to virtualize our database rather than run it on real hardware, and to make other tradeoffs in the name of speed. We only give the “our product is correct” badge after QA has had a chance to run a much larger suite of tests on real hardware.

In order to confirm correctness, we run automated tests which fall into a few categories.

- Language Specific Tests
  - Unit Tests
  - Integration Tests
- Virtualized System Tests
- System Tests

Language Specific Tests

These are C++ and Python tests that do not need any external programs set up in order to run. You can just type something like ./run_test_a, and it will return an exit code of zero on success and a non-zero exit code on failure. We use GoogleTest to write these tests in C++ and pytest to write them in Python.
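The exit-code contract is what lets CI treat every language-specific test the same way, whatever language it is written in. Below is a minimal Python sketch of that convention, assuming each test is a standalone command; `run_test` and `RunResult` are hypothetical names, not Ocient's tooling.

```python
from __future__ import annotations

import subprocess
import sys
from dataclasses import dataclass


@dataclass
class RunResult:
    command: list[str]
    exit_code: int

    @property
    def passed(self) -> bool:
        # GoogleTest binaries and pytest both exit with 0 when every test passes
        # and with a non-zero code otherwise.
        return self.exit_code == 0


def run_test(command: list[str], timeout_s: float | None = None) -> RunResult:
    """Run one self-contained test command; pass/fail comes only from its exit code."""
    completed = subprocess.run(command, capture_output=True, text=True, timeout=timeout_s)
    return RunResult(command=command, exit_code=completed.returncode)


if __name__ == "__main__":
    # Stand-ins for something like ./run_test_a: one passing and one failing "test".
    ok = run_test([sys.executable, "-c", "import sys; sys.exit(0)"])
    bad = run_test([sys.executable, "-c", "import sys; sys.exit(1)"])
    print(ok.passed, bad.passed)  # True False
```

Using the current Python interpreter as a fake test binary keeps the example runnable anywhere; in real use the command would be the test executable or a pytest invocation.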

While there is no hard line between what I am calling unit and integration tests, our language-specific tests vary massively in duration and resource requirements. The longest-running tests also tend to be the most CPU- and memory-intensive. I am going to call these larger tests “integration tests”, since they usually spin up large parts of the system and test how those parts integrate.
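To make that informal split concrete, here is a sketch that labels a test by its measured footprint. The thresholds and the `RunProfile`/`classify` names are illustrative assumptions; the post deliberately draws no hard line.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical cut-offs: there is no official boundary between the two kinds.
DURATION_LIMIT_S = 60.0
MEMORY_LIMIT_KB = 4 * 1024 * 1024  # 4 GB expressed in KB


@dataclass(frozen=True)
class RunProfile:
    name: str
    duration_s: float
    peak_memory_kb: int


def classify(profile: RunProfile) -> str:
    """Label a language-specific test 'integration' if it is long-running or memory-hungry."""
    if profile.duration_s > DURATION_LIMIT_S or profile.peak_memory_kb > MEMORY_LIMIT_KB:
        return "integration"
    return "unit"
```

Because long runtime and high memory tend to go together, checking either one is usually enough to flag the heavy tests.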

Virtualized System Tests

These are Python tests that exercise anywhere from 1 to 8 Ocient nodes running locally. Their goal is to ensure that our system works as a whole. In these tests the developer can load data from various sources, run SQL queries, kill a node, read monitoring data, and much more. They are designed to simulate real-world scenarios, such as a node crashing.

These tests require a massive amount of resources and are incredibly variable in duration and memory usage.

System Tests

These are the real deal: we run them on real clusters, and they can scale up to as many nodes as we have hardware for. They can be programmed using the same interface as the virtualized system tests.

These require full-scale Ocient nodes to run.

Developer Workflow

Let's say a developer has been working on a fix for a bug that QA found. The developer is confident they have fixed the bug, has added a unit test to cover the fix, and pushes up their code. What happens next?

Phase 1:

- We run static analysis on all the code in the codebase. Much of this is cached, since we use Bazel to cache the results of these checks and odds are the developer did not change much code. We use clang-tidy, the Clang Static Analyzer, ClangFormat, and mypy.

- While static analysis is running, we build everything required for the types of testing described above.

- [Optional] If the change can affect our release docs, we build them and push them up for review. This does not matter for most PRs.
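Bazel's actual caching (keyed on each action and the digests of its inputs) is far more involved, but the reason an unchanged file costs almost nothing to re-check can be shown with a toy content-addressed cache. `CheckCache` and the fake check below are hypothetical and only illustrate the idea.

```python
from __future__ import annotations

import hashlib
from typing import Callable


class CheckCache:
    """Toy content-addressed cache for static-analysis results.

    A result is reused only when the tool, its version, and the file contents are
    all unchanged, so re-checking an untouched file costs one hash computation.
    """

    def __init__(self) -> None:
        self._results: dict[str, bool] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(tool: str, tool_version: str, source: bytes) -> str:
        digest = hashlib.sha256()
        # NUL separators keep ("ab", "c") and ("a", "bc") from hashing alike.
        digest.update(tool.encode() + b"\0" + tool_version.encode() + b"\0")
        digest.update(source)
        return digest.hexdigest()

    def run(self, tool: str, tool_version: str, source: bytes,
            check: Callable[[bytes], bool]) -> bool:
        key = self._key(tool, tool_version, source)
        if key in self._results:
            self.hits += 1
            return self._results[key]
        self.misses += 1
        result = check(source)  # the expensive part, e.g. invoking a linter
        self._results[key] = result
        return result


if __name__ == "__main__":
    def no_tabs(src: bytes) -> bool:  # hypothetical stand-in for a real check
        return b"\t" not in src

    cache = CheckCache()
    files = {"a.cpp": b"int a;\n", "b.cpp": b"int b;\n"}
    for src in files.values():
        cache.run("fake-lint", "1.0", src, no_tabs)
    files["b.cpp"] = b"int b = 1;\n"  # the developer edits one file
    for src in files.values():
        cache.run("fake-lint", "1.0", src, no_tabs)
    print(cache.hits, cache.misses)  # 1 3
```

Including the tool version in the key means upgrading a linter invalidates its old results, which is the behavior you want from any such cache.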

Phase 2:

- We run a client-side program that decides which tests need to be run based on the level we are testing at (PRs run fewer tests, nightlies run all tests). The client submits all the jobs to our Nomad server and waits for them to run. Once all of them have run, the client program collects the results and reports back.

- [Optional] If the change can affect our release docs, we create Ocient packaging.
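A rough sketch of the Phase 2 client's select, submit, wait, and collect loop follows. A `ThreadPoolExecutor` stands in for the Nomad cluster, and the `nightly_only` tag, `CiTest`, `select_tests`, and `run_ci` are hypothetical; the real client talks to Nomad instead.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class CiTest:
    name: str
    nightly_only: bool  # hypothetical tag: skipped at the PR level


def select_tests(tests: list[CiTest], level: str) -> list[CiTest]:
    """PR runs get the subset not tagged nightly-only; nightlies run everything."""
    if level == "nightly":
        return list(tests)
    if level == "pr":
        return [t for t in tests if not t.nightly_only]
    raise ValueError(f"unknown level: {level!r}")


def run_ci(tests: list[CiTest], level: str, submit: Callable[[CiTest], bool],
           max_parallel: int = 8) -> dict[str, bool]:
    """Submit every selected test, wait for all of them, then collect pass/fail by name."""
    selected = select_tests(tests, level)
    # The thread pool stands in for the cluster: submit() is assumed to start a
    # job, block until it finishes, and return True if the test passed.
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        outcomes = list(pool.map(submit, selected))
    return {t.name: ok for t, ok in zip(selected, outcomes)}


if __name__ == "__main__":
    suite = [CiTest("parser", False), CiTest("big_join", True), CiTest("kill_node", False)]
    results = run_ci(suite, "pr", submit=lambda t: t.name != "kill_node")
    print(results)  # {'parser': True, 'kill_node': False}
```

`pool.map` returns results in submission order, so pairing them back with `selected` is safe even though the tests finish in any order.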

Duration

If we ran our tests serially, they would take well over 24 hours to complete. Because we want the turnaround between a developer submitting a PR and merging it to be as fast as possible, we need to run these tests in parallel as much as possible. In a perfect world with enough hardware, running all the tests should take only as long as the longest-running test (the long pole). The graphs below show our top tests by runtime and by memory usage.
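One way to reason about the long pole when hardware is not unlimited is the classic longest-processing-time-first (LPT) heuristic: hand each test, longest first, to whichever worker frees up first. This is a back-of-the-envelope estimator assumed for illustration, not how Nomad actually places jobs.

```python
from __future__ import annotations

import heapq


def estimate_wall_clock(durations_s: list[float], workers: int) -> float:
    """Greedy LPT estimate of total CI wall-clock time.

    With at least as many workers as tests, this is max(durations_s): the long pole.
    With one worker, it is the serial sum.
    """
    if workers < 1:
        raise ValueError("need at least one worker")
    finish_times = [0.0] * min(workers, len(durations_s))  # a min-heap of worker finish times
    heapq.heapify(finish_times)
    for duration in sorted(durations_s, reverse=True):
        earliest = heapq.heappop(finish_times)  # the worker that frees up first
        heapq.heappush(finish_times, earliest + duration)
    return max(finish_times, default=0.0)


if __name__ == "__main__":
    durations = [100.0, 40.0, 30.0, 30.0]
    print(estimate_wall_clock(durations, 1))   # 200.0 (fully serial)
    print(estimate_wall_clock(durations, 2))   # 100.0
    print(estimate_wall_clock(durations, 10))  # 100.0 (bounded by the long pole)
```

The estimate makes the tradeoff visible: past a certain number of idle workers, extra hardware stops helping and only shortening the longest test reduces turnaround.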

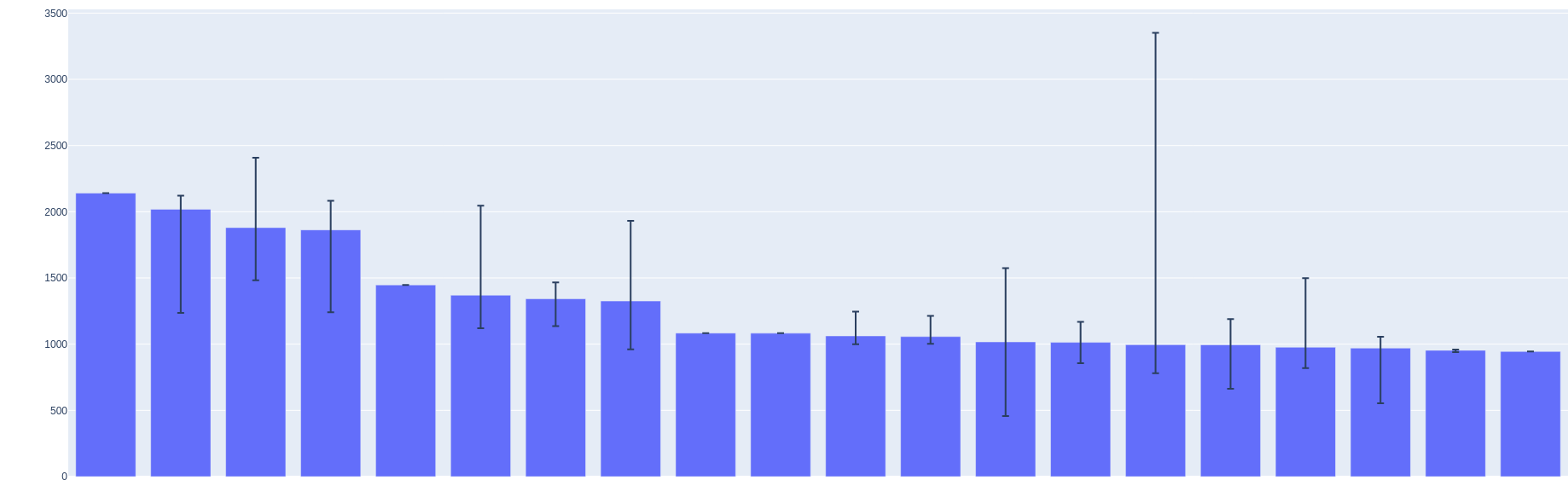

These are our 20 longest-running tests; durations are in seconds. About half are integration tests and half are virtualized system tests, and you can see some variability in their durations.

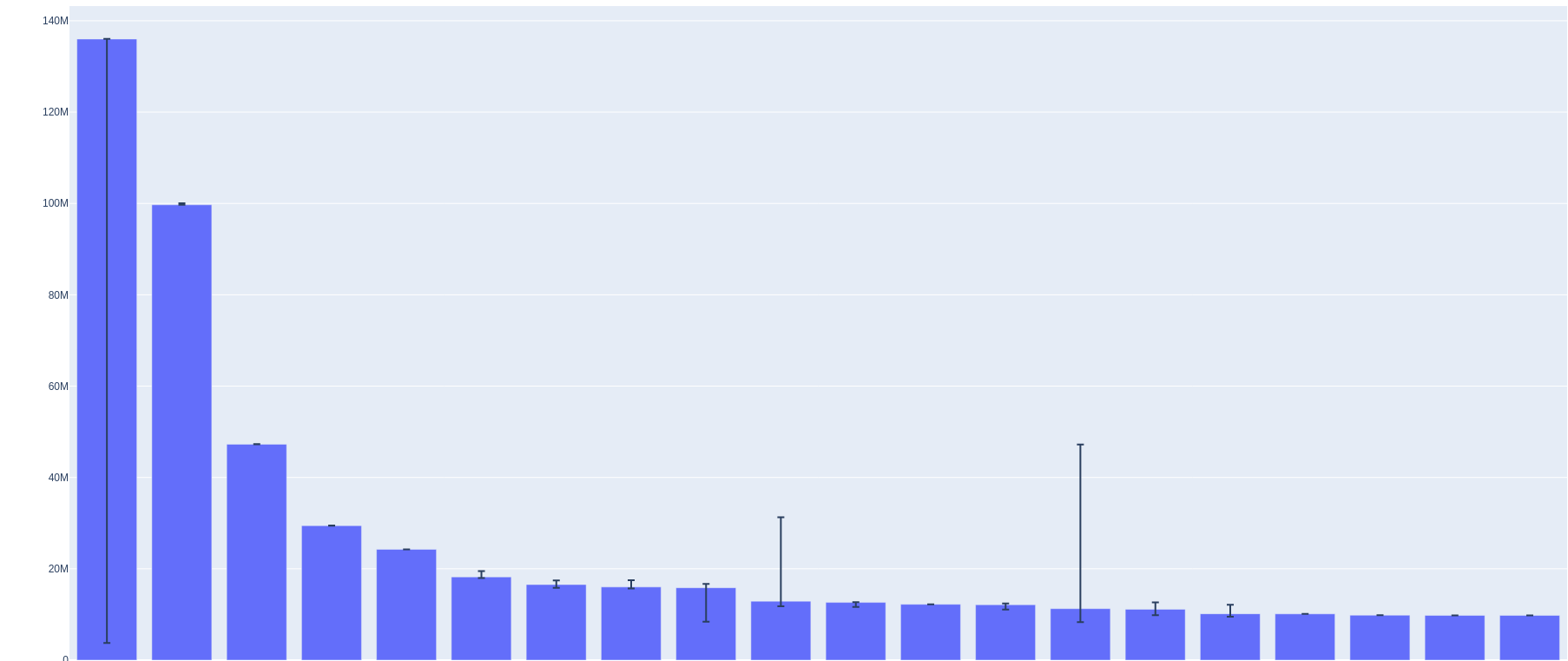

These are our top 20 tests by memory usage, in KB. The test on the far left had a bug that we recently fixed; with the fix, its memory usage drops to around 2GB. The next largest test is a unit test that uses 100GB of memory!

Nomad

For those of you familiar with the problem of running distributed tasks, some popular workload orchestration tools might come to mind: Nomad, Kubernetes, Docker Swarm. As the header states, we decided on Nomad. It is the crown jewel of our CI: it allows us to run all tests in parallel if we have enough hardware sitting idle.

We use three tiers of workers for CI on Nomad:

- Non-meta top level jobs: these are CI jobs where the computation happens inside the CI job itself. For example, when we check that the code is formatted, we run ClangFormat as part of the job.

- Meta top level jobs: these are lightweight jobs that create and poll other jobs. For example, when we run Virtualized System Tests, rather than spinning all the tests up locally, this job submits each test to Nomad as a separate job. It then collects the results along with the testing artifacts.

- Leaf jobs: these are heavy jobs that are the actual tests (unit and virtualized system).
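The meta job's fan-out and polling pattern can be sketched against a hypothetical `JobClient` interface. The method names and status strings are assumptions, not the Nomad API, and artifact collection is omitted.

```python
from __future__ import annotations

import time
from typing import Callable, Protocol

TERMINAL_STATES = {"passed", "failed"}


class JobClient(Protocol):
    """Hypothetical scheduler client; these are not the real Nomad API calls."""

    def submit(self, spec: str) -> str: ...

    def status(self, job_id: str) -> str: ...  # e.g. "pending", "running", "passed"


def run_meta_job(client: JobClient, leaf_specs: list[str],
                 poll_interval_s: float = 5.0,
                 sleep: Callable[[float], None] = time.sleep) -> dict[str, str]:
    """Fan out one leaf job per spec, then poll until every leaf reaches a terminal state.

    Spec names are assumed unique, since results are keyed by spec.
    """
    pending = {client.submit(spec): spec for spec in leaf_specs}
    results: dict[str, str] = {}
    while pending:
        for job_id in list(pending):  # copy: finished jobs are removed while iterating
            state = client.status(job_id)
            if state in TERMINAL_STATES:
                results[pending.pop(job_id)] = state
        if pending:
            sleep(poll_interval_s)
    return results
```

Injecting `sleep` keeps the meta job cheap to test; because it only submits and polls, it needs very little CPU or memory of its own, which matches its "lightweight" tier.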

We determine the allocation required for these jobs by looking at the most recent invocations of each job and seeing how much CPU and memory they used. This way developers do not need to fiddle with guessing how much memory and CPU their tests will use. Our team occasionally reviews these numbers to find tests that use far too much memory or CPU, and then works with the teams that own those tests to slim them down.

Ocient is Hiring

Ocient is hiring for all sorts of development roles. If you are interested in working on build systems or any other aspect of a distributed database, apply and drop me an email to let me know you applied.